
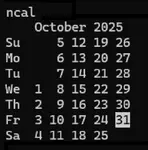

24/l2w

No.2983

How much electricity do you consume in your daily local model runs?

YMx7B4

No.2984

>>2983(OP)

I am poor. I don't have a GPU, and the one in my laptop is a GTX 1650 (good for general-purpose use, but not for running LLMs bigger than 2B).
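A rough way to see why a 4 GB card like the GTX 1650 tops out around small models: weight memory is roughly parameter count × bytes per parameter. This is a minimal sketch that assumes weights dominate and ignores KV cache, activations and runtime overhead, so real usage will be higher than these numbers. The precision table is a simplification (real quantization formats carry some extra metadata).

```python
# Back-of-the-envelope VRAM estimate for holding model weights only.
# Assumption: KV cache, activations and framework overhead are ignored,
# so actual memory use will be somewhat higher than these figures.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floats
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization (ignores per-block scale metadata)
}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes)."""
    # params_billion * 1e9 params * bytes/param / 1e9 bytes per GB
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given VRAM."""
    return weight_memory_gb(params_billion, precision) <= vram_gb


if __name__ == "__main__":
    VRAM_GB = 4.0  # the GTX 1650 has 4 GB of VRAM
    for size in (2, 7):
        for prec in ("fp16", "int8", "int4"):
            gb = weight_memory_gb(size, prec)
            print(f"{size}B @ {prec}: {gb:.1f} GB weights, fits: {fits(size, prec, VRAM_GB)}")
```

Note that a 2B model at fp16 lands right at 4 GB for weights alone, so once overhead is added it no longer fits comfortably.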

yvCyyh

No.2985

>>2983(OP)

I don't use local LLMs, as they are useless on my limited hardware.

tGbf4Z

No.2986

> No GPU

> No life

tGbf4Z

No.2989

>>2983(OP)

I ran local models on my 13th-gen i5: 65 watts peak. OK for LLM use, though not fast. Image generation was not good.

I want to try those new AMD AI CPUs with NPUs built into the chip.
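To turn a wattage figure like that 65 W peak into an answer to the OP's question, energy is just power × time (kWh = W × h / 1000). A minimal sketch, assuming the chip sits at its peak draw for the whole run (so it overestimates) and counting only the CPU, not the whole system at the wall. The daily hours and tariff below are hypothetical placeholders, not numbers from the thread.

```python
# Back-of-the-envelope energy and cost estimate for local model runs.
# Assumption: the chip draws its peak power for the entire run, so this
# is an upper bound for the CPU alone (whole-system wall draw is higher).


def energy_kwh(watts: float, hours: float) -> float:
    """Energy in kWh = power (W) x time (h) / 1000."""
    return watts * hours / 1000


def cost(kwh: float, price_per_kwh: float) -> float:
    """Electricity cost for a given energy use and tariff."""
    return kwh * price_per_kwh


if __name__ == "__main__":
    PEAK_WATTS = 65       # peak figure reported for the 13th-gen i5
    HOURS_PER_DAY = 2     # hypothetical daily usage
    PRICE_PER_KWH = 8.0   # hypothetical tariff, local currency per kWh
    daily = energy_kwh(PEAK_WATTS, HOURS_PER_DAY)
    monthly = daily * 30
    print(f"{daily:.2f} kWh/day, {monthly:.1f} kWh/month, cost ~{cost(monthly, PRICE_PER_KWH):.0f}/month")
```

At these placeholder numbers that is 0.13 kWh a day, which shows why CPU-only inference is cheap to run even if it is slow.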

tGbf4Z

No.2990

>>2983(OP)

And while I'd love a 12 GB graphics card, the costs even for a 3050 are high.